The General Dynamics Griffin III, a leading candidate for the Army’s Optionally Manned Fighting Vehicle, mounts the same 50mm gun that would be used in the ATLAS automated turret.

UPDATED with praise from Robert Work, criticism from Stuart Russell

WASHINGTON: The Army’s acquisition chief, Bruce Jette, has ordered the service’s famed night vision lab to develop an experimental “automated turret” for live-fire testing next June. Army sources confirmed to Breaking Defense that the turret will use the lab’s Advanced Targeting & Lethality Automated System (ATLAS), designed to detect potential targets, determine if they’re hostile and aim a 50mm cannon with superhuman speed and accuracy.

But — note the pause — ATLAS will not pull the trigger because it will not have a physical connection to the trigger mechanism, leaving the final decision to fire literally in human hands.

Bruce Jette

In the future, however, Jette told me in an interview, the Army might explore a less hands-on form of control. A human officer might, for example, look at surveillance data, like live imagery from a drone, and clear a platoon of robots to open fire on a whole group of targets, he said. (This would appear to meet the standard of having a human “on the loop” — what the Pentagon calls “human-supervised autonomous weapon systems” — if not strictly “in the loop.”) While Jette didn’t discuss the technical details, this approach would require the kind of automated trigger that ATLAS currently lacks.

Even this set-up probably wouldn’t violate the Defense Department’s remarkably nuanced policy requiring human control of lethal force, and the Defense Secretary could waive the policy if it did. But it would certainly alarm activists who are already skeptical of ATLAS, arguing it could easily be modified to bypass human control, and who seek a global ban on lethal autonomous weapons systems. The danger of such systems is not just that they might run rogue and kill the wrong people, skeptics say, but that a human overseer might blindly trust what the computer tells him — a phenomenon known as automation bias.

Jette, the head of Army acquisition (ASAALT), first publicly mentioned what he called the “fully autonomous turret” in a talk last week to the Association of the US Army. These initial remarks left some vital details vague, but Army staff and Jette himself clarified them later. The service’s Night Vision & Electronic Sensors Directorate will provide the automation, the Armaments Center the physical weapon, ammunition, and turret; both are now part of Army Futures Command.

Jette assured me in an interview that Army engineers will take every safety precaution and that the final product might actually be more discriminate than a weapon relying entirely on human brainpower. “I understand concerns about out-of-control turrets and stuff like that,” he told me. “The thing about it is, soldiers …. because they’re under extreme stress in a combat environment, may make more mistakes than robots if I put the parametrics in place correctly.”

The first phase of development will focus on making the automated system as fast as possible, Jette said. But the system will be thoroughly tested and debugged, and safety features and human controls will be added before it’s connected to an actual weapon for a “dry fire” run without ammunition, let alone the live-fire test tentatively scheduled for June 2020.

“Before we put any ammunition in or anything like that, we’ll have the safeties put in place,” Jette said. “You make sure you search every piece of code…and do regression analysis… because we want no unexpected behavior patterns before we ever turn this into a live-fire event.”

Only after extensive testing will the Army even consider fielding an operational system, Jette said. While ATLAS uses the same 50mm cannon envisioned for the future Optionally Manned Fighting Vehicle — basically, a self-driving replacement for the M2 Bradley — the Army hasn’t decided whether or not a robotic turret should go on the OMFV. “If it does,” Jette said, “it would be a while from now.”

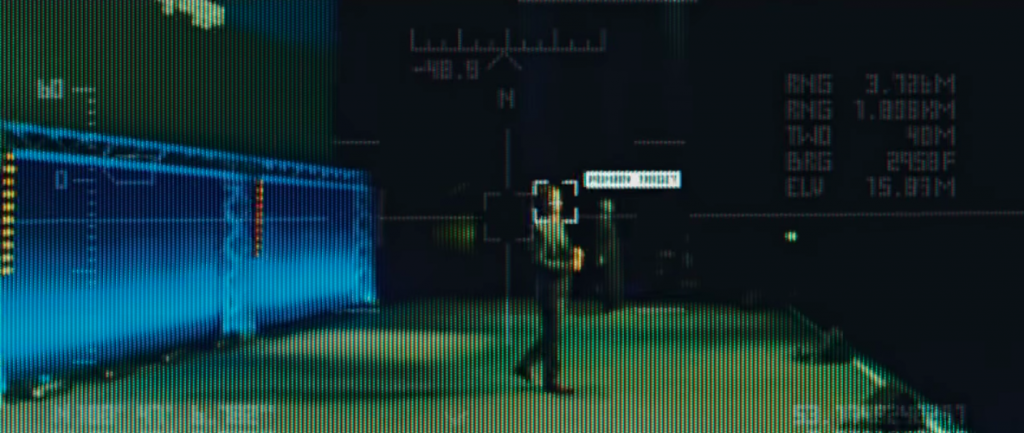

A drone’s eye view of the target in the anti-lethal AI video “Slaughterbots”

Critics & Cheerleaders

UPDATE BEGINS Berkeley scientist and activist Stuart Russell, one of the leading critics of ATLAS, was quick to register his dismay. “It gives me a sinking feeling that we are rapidly sliding down the slippery slope,” Russell told me in an email. “If they are explicitly contemplating a human authorizing an attack on a whole group of targets, then the algorithm is making the target recognition and firing decisions. After that it’s a short journey to fully autonomous tanks, ground-attack air vehicles, etc.”

Stuart Russell

“You can justify anything by saying ‘What if our enemy does it first?’” Russell said. “That doesn’t make it true and doesn’t relieve you of the obligation to pursue diplomacy rather than an arms race.”

Robert Work

On the other side of the debate, former Deputy Secretary of Defense Bob Work, who through his “Third Offset Strategy” did more than any other individual to get the Pentagon to embrace AI and robotics, was quick to praise the project. “I applaud Secretary Jette’s initiative,” Work emailed after seeing the original version of this article. “This is the logical first step in learning the possibilities and limitations of AI-enabled weapons systems with some types of autonomous functionality.”

“You have to see how they perform in the real world. You have to gather data to train the systems to perform its assigned tasks better. You have to determine if the algorithms can be trusted to do what they were designed to do, how susceptible they are to error,” Work continued. “Once you do all these things and have established operational trust with the system, the debate over what tasks to assign to it can take place, informed by hard data and not supposition.” UPDATE ENDS

Experimental robotic M113 armored vehicle (with human monitor aboard for safety) at Camp Grayling in 2017

Speed Kills

Why would you want robotic weapons, anyway? It’s simple: they’re quicker on the draw.

While most military officers are leery of robotic weapons, Jette is not the first Pentagon leader to publicly argue they may have an insurmountable advantage on speed. Under Obama, the top acquisitions and technology official for the Defense Department, Frank Kendall, publicly suggested that if adversaries removed slow-thinking humans from their weapons control systems, the US might have to do so as well.

Even earlier, the Navy implemented an automatic mode on its Aegis fire control system. While intended to handle overwhelming waves of incoming missiles arriving too fast for human operators to respond, the automated Aegis could also shoot down manned aircraft. The Army itself is now installing the Israeli-made Trophy and Iron Fist Active Protection Systems on its M1 Abrams and M2 Bradleys. These systems detect and shoot down incoming anti-tank rockets and missiles, a function that has to be fully automated because human reaction times are just too slow.

Navy Aegis ships firing. The Aegis fire control system already has a fully automated mode — but for anti-aircraft and missile defense, not ground targets.

Jette, however, is talking about automating the identification of ground vehicles, which almost always have human beings inside. But he believes ceding some human functions to computers may be the only way to move fast enough in a future fight. A former Army armor officer himself, he would have been well-trained in the historical truth that victory in tank battles typically goes not to the biggest gun or thickest armor, but to the side that sees the enemy first and takes the first shot.

“Time is the weapon,” Jette told me at the start of an interview on the system. “What I want to do, I want to find out how fast we can get the system to work.”

How fast? “The lights in this room are on 60 hertz,” Jette said last week at AUSA headquarters in Arlington. That means standard fluorescents flicker on and off about 60 times a second. We just don’t notice because our brains can’t take in information that fast: Human neurons also run at roughly 60 hertz. (If you notice fluorescents flickering, they’re running at 50 Hz or less.) A fly’s brain is much smaller than a human’s, but it processes visual information faster, about 80 Hz, which is why it’s so difficult to swat one.

Likewise, Jette argued, the computerized turret, while not smarter than a human, will think faster — enabling it to set up that critical first shot: “It’ll be as fast as a fly.”

Predator drone operators.

‘There Would Always Be A Man’

While computers will provide speed, however, humans are still needed to provide judgment.

“There would always be a man, we can call it ‘in the loop’ or ‘on the loop,’” Jette told me. “In the loop would be, it pops a target up, it says ‘hey, I want to shoot something,’ and then you say ‘yes’ [or no….] You’re not doing the fire control, you’re not doing the aiming, but you are confirming you want the shot taken.”

With “a man on the loop,” Jette continued, “the system comes up, identifies, let’s say, five targets, [and] the man on the loop simply confirms you’re authorized to shoot and engage the targets in the sequence you’ve proposed. And so the gun then automatically engages the five targets — but only the five targets that have been approved.”

A Tesla wrecked after a fatal accident in which its driver put too much faith in its Autopilot self-driving system.

The human commander might never have their eyes directly on the target, Jette said, but “it’s not like it’s just a dot on the screen [as it is with Aegis radar scopes — ed.]. The ISR [Intelligence, Surveillance, & Reconnaissance] may be a visual image of the target…It’s got to be some representation of the actual target that allows the user, the controller, to be able to apply his or her analytic competencies to the problem.”

Just how the targets are presented to the human users, and how those humans are trained, raises the danger of automation bias. That’s the marked tendency of humans to blindly follow whatever a computer outputs rather than questioning its conclusions. When an ATLAS gunner is presented with a list of targets, you don’t want him hitting “fire” as reflexively as a smartphone user hits “accept” when presented with the latest update to the Terms & Conditions.

In extreme cases, human users stop paying attention entirely to automated systems that still require their oversight, like the Tesla driver who let his Autopilot feature drive him into a truck. The best protection against this kind of artificial stupidity? A carefully designed interface and intensive training to keep the human constantly engaged.

“We don’t envision sending robots out there while we head off to lunch,” Jette emphasized. “It’s an augmentation to the individual, it is not a replacement for the individual.”

That said, Jette does envision replacing some individuals while augmenting others. Even if the human controller is physically in the same vehicle with the robotic turret, the automation would at least replace the human loader — something most Western armies have long resisted — and possibly the entire turret crew, both the gunner and the commander. (The command role would presumably pass to the fourth and final crewman, the driver, who’s seated down in the main hull).

Army M1 Abrams tank with a trial installation of the Israeli-made Trophy, an automated Active Protection System (APS) to shoot down incoming anti-tank rockets and missiles.

“I got a lot of resistance,” Jette admitted at the AUSA event, which was focused on logistics. “If you take three people out of every one of those tanks, who’s going to maintain the tank?”

Tankers, like most troops, spend far more time keeping their weapons in working order than actually firing them. While a one- or two-person tank with an automated turret could be smaller than a four-person machine, it’s unlikely to be less mechanically complex.

Jette’s idea for a single human overseeing “a platoon of unmanned guns” pushes the ratio of humans to machines even lower. Such vehicles might need a maintenance and support system more like fighter aircraft, which have a separate ground crew rather than relying primarily on the pilot.

Those are the kinds of logistical concerns, Jette told the AUSA gathering, that weapons programs need to consider at the earliest stages. But they’re still easier than the tactical and ethical questions of automating, even partially, the killing of human beings.
