A soldier holds a PD-100 mini-drone during the PACMAN-I experiment in Hawaii.

APPLIED PHYSICS LABORATORY: “Brothers and sisters, my name is Bob Work, and I have sinned,” the Deputy Secretary of Defense said to laughter. There’s widespread agreement in the military that artificial intelligence, robotics, and human-machine teaming will change the way that war is waged, Work told an AI conference here Thursday, “but I am starting to believe very, very deeply that it is also going to change the nature of war.”

“There’s no greater sin in the profession” than to suggest that new technology could change the “immutable” nature of human conflict, rather than just change the tools with which it’s waged, Work acknowledged. (He wryly noted he’d waited to make this statement until “my boss, the warrior monk, happens to be out of the country”). But Work is both a classically trained Marine Corps officer and the Pentagon’s foremost advocate of artificial intelligence.

“The nature of war is all about a collision of will, fear, uncertainty, and chance,” Work said, summarizing Clausewitz. “You have to ask yourself, how does fear play out in a world when a lot of the action is taking place between unmanned systems?”

Human fallibility is central to Clausewitz and to classic theories of war as far back as Sun Tzu. But if machines start making the decisions, unswayed by fear, rage, or pride, how does that change the fundamental calculus of conflict?

“Uncertainty is going to be different now,” Work went on. While he didn’t use the utopian language of millennial Revolution in Military Affairs — whose promise to “lift the fog of war” with high-tech sensors failed utterly in Afghanistan and Iraq — Work did argue that computerized decision-making aids could help commanders see with greater clarity.

“Clausewitz had a term called coup d’oeil,” Work said, essentially a great commander’s intuitive grasp of what was happening on the battlefield. It’s a quality Clausewitz and Napoleon considered innate, individual, impossible to replicate, but, Work said, “learning machines are going to give more and more commanders coup d’oeil.”

That said, uncertainty isn’t going to go away, Work said. We could guess the capabilities of a new Russian tank by watching it parade across Red Square; an adversary’s new AI will only reveal its true nature in battle. “Surprise is going to be endemic, because a lot of the advances that the other people are doing on their weapons systems, we won’t see until we fight them,” Work said, “and if they have artificial intelligence then that’s better than ours, that’s going to be a bad day.”

A Punisher unmanned ground vehicle follows a soldier during the PACMAN-I experiment in Hawaii.

Chaos Theory

Introducing artificial intelligence to the battlefield could create unprecedented uncertainty. The interactions of opposing AIs could form an increasingly unpredictable feedback loop, a military application of chaos theory.

“We’ve never gotten to the point where we’ve had enough narrow AI systems working together throughout a network for us to be able to see what type of interactions we might have,” Work said. (“Narrow” AI refers to programs that can equal human intelligence for a specific purpose; “general” AI would equal human intelligence in all aspects, an achievement so far found only in sci-fi.)

So what’s the solution? In part, Work said, it’s the cautious, conservative Pentagon processes widely derided as obstacles to innovation. In particular, he pointed to “operational test and evaluation, where you convince yourself that the machines will do exactly what you expect them to, reliably and repeatedly.”

An unmanned surface vessel equipped for minesweeping at Unmanned Warrior 2016 off Scotland.

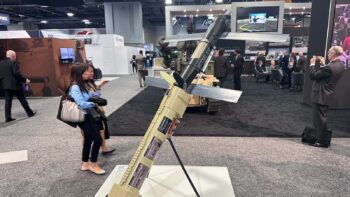

One crucial restraint we want our AIs to follow, Work emphasized, is that they won’t kill a target without specific orders from a human being. “You can envision a world of general (artificial) intelligence where a weapon might make those decisions, but we are certain that we do not want to pursue that at this time,” he said.

“We are not going to design weapons that decide what target to hit,” he said. That doesn’t mean a human has to pull the trigger every time: “We’re going to say when we launch you, you can hit one of these five targets, and oh by the way, here’s the priority that we want to service them in; and if you don’t find the fifth target, you don’t get to decide if you’re going to go kill something else. You will either dive into the ocean or self-destruct.”

The problem with such self-imposed restrictions, of course, is that they put you at a disadvantage against adversaries who don’t share them. If we build our military AIs so that we can predict their behavior in testing, will our enemies be able to predict their behavior on the battlefield? If we require our AIs to get permission from slow-thinking humans before opening fire, will our enemies out-draw us with AIs that shoot first and ask humans later?

The Civilians Speak

“If one country restrains itself to not develop artificial general intelligence or living AI … adversaries would have an incentive to develop more complex adaptive machines that would be out of control,” because it could give them a crushing advantage, said David Hanson, CEO of Hanson Robotics. Even outside the military field, Hanson said, “many companies are aspiring to make really complex adaptive AI that may not entirely be transparent and its very value is in the fact that it’s surprising.” AI is potentially more powerful (and more profitable) than any other technology precisely because it can surprise its makers, finding solutions they’d never thought of.

That’s also why it’s more dangerous. You don’t need a malevolent AI to cause problems, just a childishly single-minded AI that doesn’t realize its clever solution has an unfortunate side effect — such as, say, global extinction. Blogger Tim Urban lays out a thought experiment of an AI programmed to replicate human handwriting that wipes out humanity in order to maximize its supply of notepaper. In one experiment, Oxford University scholar Anders Sandberg told the APL conference, a prototype warehouse robot was programmed to put boxes down a chute. A surveillance camera monitored its progress so it could be turned off when appropriate — until the robot learned to block the camera so it could happily put all the boxes down the chute. It sounds like an adorable three-year-old playing, until you imagine the same thing happening with, say, missile launches.

We’re a long way away from an AI smart enough to be evil, said venture capitalist Jacob Vogelstein: “What’s much more likely to kill us all than an intelligent system that goes off and tries to plot and take over the world is some failure mode of an automated launch system (for example), not because it has some nefarious intention, just because someone screwed up the code.”

“It’s very hard to control autonomy, not because it’s wild or because it wants to be free, (but because) we’re creating these complex, adaptive technological systems,” Sandberg said. Indeed, the most powerful and popular way to make an AI currently is not to program its intelligence line-by-line, but to create a “learning machine” and feed it lots of data so it can learn from experience and trial and error, like a human infant. Unfortunately, it’s very hard with such systems to understand exactly how they learned something or why they made a certain decision, let alone to predict their future actions.

“It’s generally a matter of engineering how much risk and uncertainty are you willing to handle,” said Sandberg. “In some domains, we might say, actually it’s pretty okay to try things and fail fast and learn from experience. In other systems, especially (involving) big missiles and explosions, you might want to be very conservative.”

What if our adversaries are willing to throw those dice? Work has confidence that American ingenuity and ethics will prevail, and that American machines working together with American humans will beat AIs designed by rigid authoritarians who suppress their own people’s creative potential. But he admits there is no guarantee.

“This is a competition,” Work said. “We’ll just have to wait and see how that competition unfolds, and we’ll have to go very, very carefully.”

This story is part of our new series, “The War Algorithm.”