THE NEWSEUM: Artificial intelligence is coming soon to a battlefield near you — with plenty of help from the private sector. Within six months the US military will start using commercial AI algorithms to sort through its masses of intelligence data on the Islamic State.

“We will put an algorithm into a combat zone before the end of this calendar year, and the only way to do that is with commercial partners,” said Col. Drew Cukor.

Millions of Humans?

How big a deal is this? Don’t let the lack of general’s stars on Col. Cukor’s shoulders lead you to underestimate his importance. He heads the Algorithmic Warfare Cross Function Team, personally created by outgoing Deputy Defense Secretary Bob Work to apply AI to sorting the digital deluge of intelligence data.

This isn’t a multi-year program to develop the perfect solution: “The state of the art is good enough for the government,” Cukor said at the Defense One technology conference here this morning. Existing commercial technology can be integrated into existing government systems.

“We’re not talking about three million lines of code,” Cukor said. “We’re talking about 75 lines of code… placed inside of a larger software (architecture)” that already exists for intelligence-gathering.

For decades, the US military has invested in better sensors to gather more intelligence, better networks to transmit that data, and more humans to stare at the information until they find something. “Our work force is frankly overwhelmed by the amount of data,” Cukor said. The problem, he noted, is that “staring at things for long periods of time is clearly not what humans were designed for.” US analysts can’t get to all the data we collect, and we can’t calculate how much their bleary eyes miss in what they do look at.

We can’t keep throwing people at the problem. At the National Geospatial-Intelligence Agency, for example, NGA mission integration director Scott Currie told the conference, “if we looked at the proliferation of the new satellites over time, and we continue to do business the way we do, we’d have to hire two million more imagery analysts.”

Rather than hire the entire population of, say, Houston, Currie continued, “we need to move towards services and algorithms and machine learning, (but) we need industry’s help to get there because we cannot possibly do it ourselves.”

Private Sector Partners

Cukor’s task force is now spearheading this effort across the Defense Department. “We’re working with him and his team,” said Dale Ormond, principal director for research in the Office of the Secretary of Defense. “We’re bringing to bear the combined expertise of our laboratory system across the Department of Defense complex.

“We’re holding a workshop in a couple of weeks…to baseline where we are both in industry and with our laboratories,” Ormond told the conference. “Then we’re going to have a closed door session (to decide) what are the investments we need to make as a department, what is industry doing (already).”

Just as the Pentagon needs the private sector to lead the way, Cukor noted, many promising but struggling start-ups need government funding to succeed. While Tesla, Google, GM, and other investors in self-driving cars are lavishly funding work on artificial vision for collision avoidance, there’s a much smaller commercial market for other technologies such as object recognition. All a Google Car needs to know about a vehicle or a building is how to avoid crashing into it. A military AI needs to know whether it’s a civilian pickup or an ISIS technical with a machine gun in the truck bed, a hospital or a hideout.

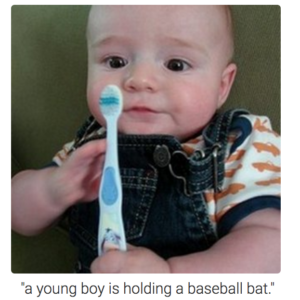

An example of the shortcomings of artificial intelligence when it comes to image recognition. (Andrej Karpathy, Li Fei-Fei, Stanford University)

These are not insurmountable problems, Cukor emphasized. The Algorithmic Warfare project is focused on defeating Daesh, he said, not on recognizing every weapon and vehicle in, say, the Russian order of battle. He believes there are only “about 38 classes of objects” the software will need to distinguish.

It’s not easy to program an artificial intelligence to tell objects apart, however. There’s no single Platonic ideal of a terrorist you can upload for the AI to compare real-life imagery against. Instead, modern machine learning techniques feed the AI lots of different real-world data — the more the better — until it learns by trial and error what features every object of a given type has in common. It’s basically the way a toddler learns the difference between a car and a train (protip: count the wheels). This process goes much faster when humans have already labeled what data goes in what category.
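For readers who want to see the idea of learning from labeled examples in miniature, here is a toy sketch in Python. It is not the Pentagon's method or anything Cukor described: it swaps in a nearest-centroid classifier, which simply averages the labeled examples of each class instead of training by trial and error. The two features (wheel count and length in meters) and every data point are made up for illustration.

```python
from collections import defaultdict
from math import dist

# Hypothetical labeled examples: (wheel_count, length_in_meters) -> label.
# A human has already said which category each example belongs to.
LABELED_EXAMPLES = [
    ((4, 4.5), "car"),
    ((4, 5.0), "car"),
    ((6, 7.0), "car"),
    ((24, 60.0), "train"),
    ((32, 80.0), "train"),
    ((48, 120.0), "train"),
]


def train(examples):
    """Average the feature vectors of each label into one centroid per class."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


centroids = train(LABELED_EXAMPLES)
print(classify(centroids, (4, 4.2)))     # car
print(classify(centroids, (40, 100.0)))  # train
```

The more labeled examples go into `train`, the better each centroid reflects what objects of that type have in common. Real imagery classifiers learn far richer features than two hand-picked numbers, but they depend on labeled data in the same way.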

“These algorithms need large data sets, and we’re just starting labeling,” Cukor said. “It’s just a matter of how big our labeled data sets can get.” Some of this labeling must be done by government personnel, Cukor said; he didn’t say why, but presumably this includes the most highly classified material. But much of it is being outsourced to “a significant data-labeling company,” which he didn’t name.

This all adds up to a complex undertaking on a tight timeline — something the Pentagon historically does not do well. “I wish we could buy AI like we buy lettuce at Safeway, where we can walk in, swipe a credit card, and walk out,” Cukor said. “There are no shortcuts.”
