Army HIMARS trucks launch Guided Multiple Launch Rocket System missiles

WASHINGTON: The military must not get so fixated on using artificial intelligence to find targets that it neglects its wider applications from deployment planning to escalation control, warns the new director of the Pentagon’s Joint AI Center.

In recent field tests, an experimental Army AI was able to find targets in satellite imagery and relay target coordinates to artillery in under 20 seconds. Accelerating the “kill chain” from detection to destruction this way is a powerful but narrow application of artificial intelligence, said Lt. Gen. Michael Groen, a Marine Corps intelligence officer who took over JAIC on Oct. 1.

Lt. Gen. Michael Groen

Misapplication of AI raises the potential for “rapid escalation and strategic instability,” Groen told an NDIA conference last week. “That’s really where we have to…go back to ethical principles.”

The principles for military AI promulgated in February, Groen noted, require artificial intelligence to be “governable.” To quote that policy: “The Department will design and engineer AI capabilities to fulfill their intended functions while possessing the ability to detect and avoid unintended consequences, and the ability to disengage or deactivate deployed systems that demonstrate unintended behavior.”

“Being able to take out 10 targets in rapid succession … that’s very exciting. It’s awesome. But it’s not enough,” Groen said. “We need to think through the human-machine team and how the machine is queuing up decisions for humans to make.”

“When I think about the artificial intelligence applications, I’m thinking beyond just the use case of near-instantaneous fires upon the detection of a target,” he said. “There’s a broad range of decision-making that has to occur across the joint force that can be enabled by AI…even your decisions about how you surge forces into theater and the sequencing of your maneuver on the ground.”

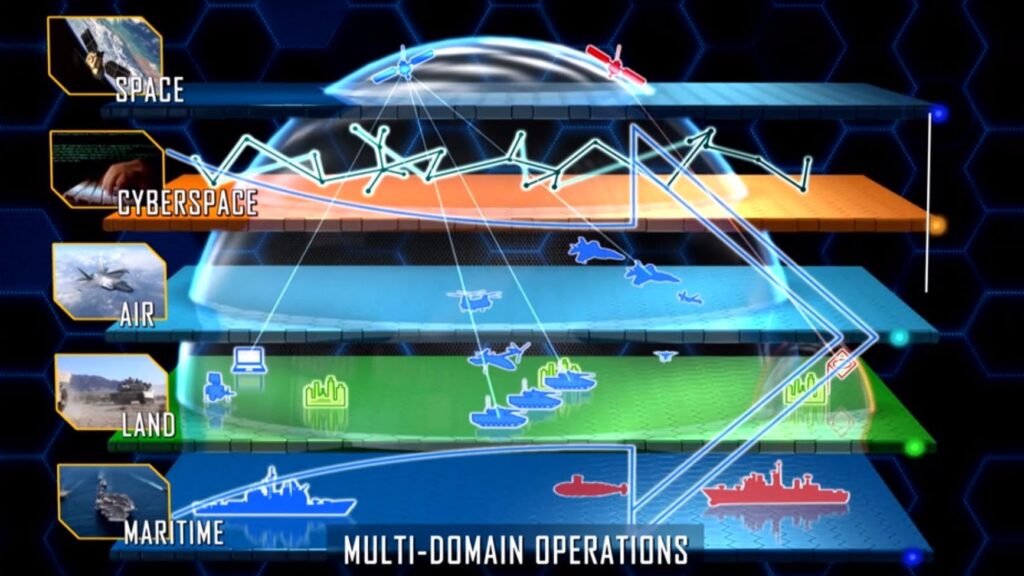

Groen was speaking to a conference on Joint All-Domain Command & Control (JADC2). That’s an awkward chunk of Pentagon jargon that refers, in essence, to a future meta-network linking US and allied forces across the five “domains” of land, sea, air, space, and cyberspace (and electronic warfare, which isn’t officially a domain). In such a system, the exponentially increasing complexity of interconnections, the daunting floods of data and the millisecond speed of the machines could easily overwhelm human decision-makers – unless some form of artificial intelligence can help manage the details and perhaps suggest courses of action. But what level of detail can we safely entrust to the AI, and what still requires human oversight?

Multi-Domain Operations, or All Domain Operations, envisions a new collaboration across land, sea, air, space, and cyberspace (Army graphic)

“It really comes down to our comfort with autonomy,” Groen said. “This is why we need to look beyond just the fires use case,” to the vast panoply of functions that a JADC2 network would need to serve.

“JADC2 is really the backbone” of the future force, but “how do you build it?” he asked. “Is JADC2 AI-ready?”

Groen and other officials emphasize that AI is not a secret sauce or magic potion you can just sprinkle on existing systems to make them better. It takes a lot of work to get a system to the point that JAIC calls “AI-ready.”

“It is not just, ‘do we have a missile ready on the launcher?’” Groen said. “AI-readiness means ensuring the data flow from thousands of sensors is going to be available…. It means compute resources are available for algorithm development… It means an app-friendly environment that allows edge users to customize the data flows and their supporting tools.”

Getting good-enough data is the starting point. Groen’s predecessor, JAIC founder Lt. Gen. Jack Shanahan, once told me that data is the raw material, or “mineral ore,” from which AI is built.

Modern machine learning relies on the premise that, in a sufficiently large set of data, clusters of data points will emerge that correspond to things in the real world.

As with physical raw materials, data comes in lots of different kinds, and you need to cross-reference carefully before you can (for example) fire at a suspected enemy unit in full confidence that they really are hostiles – not civilians or friendlies – and that you are really going to hit what you’re aiming at.

“Think about the things that feed solutions across the Joint Force,” Groen said. “Position data, timing data, threat location data, blue [i.e. friendly] location data, blue force status data, weather data, logistics data.”

In each case, he went on, before you build your AI, you have to ask and answer: “Who are the authoritative sources for those things to drive decision making? Do the authoritative sources for those types of data know they are the authoritative sources? Are they connected to this project? Do they understand what their responsibility is going to be?”

That kind of coordination is as much a human and bureaucratic problem as a technical one. And it’s not just the technicians designing and building an AI that have to think through these issues: It’s the commanders using it as well.

Those commanders can’t just delegate “all that digital stuff that I don’t want to worry about because it’s not warfighting,” Groen warned. “This is the business of commanders, not [just] network engineers… We need commanders to think thoroughly about their decision-making. What kind of decisions do they need to make? What data sources are they going to need?”

Helping AI laymen think through these complex questions has become a major mission for the Joint Artificial Intelligence Center.

“There are thousands of potential customers out there that don’t know how to spell AI,” Groen said. “They don’t know they need it.”

JAIC is now creating a cadre of experts and a cloud-based Joint Common Foundation to help organizations across the Defense Department grow their own AI. The JCF will provide “a starter set,” Groen explained. “If you think you have an AI use case, and you want to develop that, we’ll bring you expertise, [and on JCF] we’ll build you an enclave, we’ll host your data… We may help you with data labeling services, [and] the test and evaluation aspect.”

That doesn’t mean JAIC will sit in judgment on all military artificial intelligence efforts. “I don’t think that we’ll get to a space where the JCF does all the tests and evaluation for all of the AI instantiations across the department,” Groen said. “But… we’ve got just a top-notch team of AI testing expertise. What we can do is define standards and best practices.”