While AI is improving all the time, playing chess is small fry compared to actual war scenarios. (Westend61/Getty Images)

WASHINGTON — Chess is the world’s oldest wargame, and computers mastered it in 1997. Real war is vastly more complex, and machines still struggle to make sense of it. So while the machine-learning techniques that power today’s AI can crunch the numbers to win at chess — two identical armies of 16 pieces fighting over 64 squares — they cannot grapple with the ambiguity and chaos of actual combat.

That’s a problem for the Pentagon, which wants AI-driven “battle management” aides to help human commanders coordinate Joint All Domain Operations with dozens of ships, hundreds of aircraft, and thousands of troops maneuvering across land, sea, air, space, and cyberspace. So the Defense Department’s official agency for long-shot, high-payoff research, DARPA, contracted earlier this year with three companies to push past machine learning to the next generation of AI: something that could, at least in theory, help bring order to what DARPA calls “strategic chaos.”

“JADC2 [Joint All Domain Command & Control] does require a lot of planning,” said Aaron Kofford, a DARPA program manager. “That’s what SCEPTER’s all about.”

SCEPTER is short for the Strategic Chaos Engine for Planning, Tactics, Experimentation and Resiliency, where “Engine” is a deliberate nod to the game-playing programs known as chess engines, Kofford explained in an interview with Breaking Defense.

“I’m a chess player,” Kofford said, and there’s a lot to learn from chess AI. Modern chess engines can not only beat human players, he emphasized, they can also help them by analyzing their games, commentating, and advising on better moves. Similar AI tools for warfare could help commanders and their staffs plan a military operation.

“This is not replacing humans,” he emphasized. “This is a tool for humans.” (That’s in keeping with longstanding Pentagon policy and strategic culture that prefers a symbiosis between human and machine, rather than a SkyNet-style AI that controls everything like, well, pawns). “We are trying to improve the tools that humans have to help them evaluate situations.”

“Trying” is very much the operative word. “We’re still in the ‘can this possibly work?’ step,” Kofford admitted freely. “This is a pretty crazy idea.”

Why? Games like chess are amenable to computers because they are, as Kofford puts it, “well-bounded.” While the total number of possible positions is practically infinite, the available choices for each player on each turn are starkly limited and clearly defined. So algorithms can explore potential pathways step-by-step, then calculate which branching paths are likely to lead to victory and which lead to loss and should be trimmed. (The technical term is “Monte Carlo tree search”).
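To make the idea concrete, here is a minimal sketch of Monte Carlo tree search on a deliberately tiny "well-bounded" game. The game (a Nim variant where players remove one to three stones and whoever takes the last stone wins), the function names, and the parameter values are all assumptions chosen for illustration; nothing here comes from DARPA or from any real chess engine.

```python
import math
import random

MAX_TAKE = 3  # assumed rule: remove 1-3 stones; taking the last stone wins


def legal_moves(stones):
    return list(range(1, min(MAX_TAKE, stones) + 1))


class Node:
    def __init__(self, stones, parent=None, move=None):
        self.stones = stones              # stones left after `move` was played
        self.parent = parent
        self.move = move                  # move that led to this node
        self.children = []
        self.untried = legal_moves(stones)
        self.visits = 0
        self.wins = 0.0                   # wins for the player who made `move`


def uct_child(node, c=1.4):
    # Balance exploiting strong branches against exploring rarely tried ones.
    return max(
        node.children,
        key=lambda ch: ch.wins / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def rollout(stones, rng):
    """Play random moves; return True if the player to move first wins."""
    first_player_to_move = True
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            return first_player_to_move
        first_player_to_move = not first_player_to_move
    return False  # no stones left: the player to move has already lost


def best_move(stones, iterations=2000, seed=0):
    """Return the most-visited first move (assumes stones >= 1)."""
    rng = random.Random(seed)
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: walk down fully expanded nodes.
        while not node.untried and node.children:
            node = uct_child(node)
        # 2. Expansion: add one untried branch.
        if node.untried:
            move = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: random playout from the new position.
        mover_won = not rollout(node.stones, rng)
        # 4. Backpropagation: credit alternates between the two players.
        while node is not None:
            node.visits += 1
            if mover_won:
                node.wins += 1
            mover_won = not mover_won
            node = node.parent
    return max(root.children, key=lambda ch: ch.visits).move
```

With three stones on the table, `best_move(3)` should settle on taking all three, since that branch wins every time it is tried while the alternatives hand the opponent a winning reply. The search never enumerates the whole tree; it spends its effort on branches that keep paying off.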

But for a military force about to enter battle, or just leaving its home base for the front, the number of possible “next moves” is much larger and less precisely measurable than on an 8 by 8 grid. Even for an ordinary civilian getting up in the morning, there are incalculably many options, some trivial and some radical, from “make coffee, then go to work” to “go to work, then get coffee” to “quit my job, sell all my belongings, and go join a commune in Nepal.”

“There is an infinite number of things I could do next,” Kofford said, which requires cutting down the infinite possibilities to something the machine can manage.

To do that, it turns out, you need to revive an idea that predates machine learning, going back to what DARPA calls “first wave AI”: so-called expert systems, still used today for everything from tax preparation to medical diagnosis. Instead of unleashing the algorithm on a mass of data and having it search by trial and error for correlations, you start with knowledgeable humans (experts) defining a set of options and rules for the machine to follow.

“I do think we can apply some expert knowledge to search some of the more important spaces and search them quickly,” Kofford said, “faster than any individual human mind or any collective human council.”
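A rough sketch of that expert-system idea, using the morning-routine options from earlier in this article: human-written rules discard implausible options before any search begins. The `Option` fields, the two rules, and the numbers are hypothetical, invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Option:
    name: str
    hours: float        # assumed time cost of the option
    keeps_job: bool     # assumed flag: does the option keep the person employed?


@dataclass(frozen=True)
class Rule:
    description: str
    allows: Callable[[Option], bool]


# Hypothetical rules a human expert might write down.
RULES = [
    Rule("fits into a normal morning", lambda o: o.hours <= 3),
    Rule("does not abandon the job", lambda o: o.keeps_job),
]


def prune(options, rules):
    """Keep only the options that every expert rule allows."""
    return [o for o in options if all(rule.allows(o) for rule in rules)]


options = [
    Option("make coffee, then go to work", hours=1.0, keeps_job=True),
    Option("go to work, then get coffee", hours=1.0, keeps_job=True),
    Option("quit job and join a commune in Nepal", hours=500.0, keeps_job=False),
]

plausible = prune(options, RULES)
print([o.name for o in plausible])
```

Only the two coffee-and-work options survive, so whatever search comes next has a far smaller space to cover. The rules encode judgment the machine did not have to learn from data.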

The goal is to get the best of both worlds, a synergy of human understanding of the universe (as in old-school expert systems) and computer speed at processing big data (as in modern machine learning). But just like with the decision tree in combat, there are multiple ideas for how to get to that end result, and DARPA is open to different approaches. So the agency has awarded three contracts for SCEPTER — to research firm Charles River Analytics, non-profit Parallax Advanced Research, and behemoth BAE Systems — to explore three different paths.

Symbolic Reasoning: What Would Humans Do?

How do ordinary people cope with the everyday, real-world messiness that baffles the smartest machines? “What makes these problems solvable, for humans at least, is that humans are always applying a big bag of what you might call heuristics or approximations,” Charles River scientist Michael Harradon told Breaking Defense. “I don’t have to consider every possible thing.”

When you’re driving and deciding where to turn, for instance, you don’t calculate every possible angle from zero degrees to 180; you generally just choose left, right, or straight. When tax prep software generates your return, Harradon explained, it doesn’t randomly allocate all possible numbers to all possible lines of Form 1040 and then apply machine learning to figure out which combinations are less likely to get you audited; it follows strict rules pre-programmed by human experts.

“Any time you are deducing something logically, any time you are producing a checklist, any time you’re making a plan,” Harradon explained, “those are all really forms of symbolic reasoning.” Computers may have a harder time coming up with a checklist, but once one is made, they can work through it much faster than a human, or even than a machine learning algorithm trying to calculate every possibility.

So how do you create a big enough checklist to plan a battle? That’s where it gets tricky, Harradon admits. Instead of only using heuristics or only using machine learning, he says, you “take the best of both” and combine them, each feeding back to improve the other. In some areas, humans can input heuristics to tame a mass of data; in others, the algorithm can crunch the data, find correlations, and deduce its own rules of thumb.

“This field, it’s very much in its infancy,” Harradon acknowledges, “[but] there are a number of really good demonstrations” – including some in-house studies that Charles River isn’t ready to share.

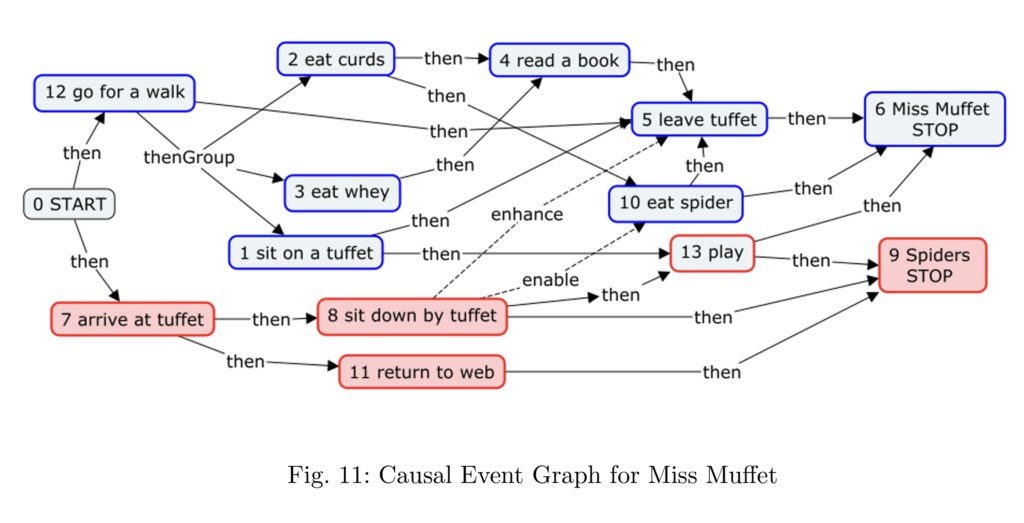

Causal Networks: Little Miss Muffet Sat Down On A Tuffet

In contrast to Charles River’s straightforward method of combining human expertise with computer calculation, Parallax’s approach is almost whimsical: It has people tell the AI stories, in flowchart form.

Consider Miss Muffet of nursery rhyme fame, Parallax scientist Van Parunak said in an interview. If she’s sitting on her tuffet, eating her iconic curds and whey (a form of cottage cheese), and along comes a spider… does she run away? Keep eating? Smush the spider? Eat the spider? (That last option first occurred to Parunak in 5th grade). While Miss Muffet theoretically has infinite options (far too many for even a computer to analyze), in practice there’s a finite number of plausible, relevant responses to the situation.

Each of those responses leads in turn to a new situation, where there are new possible options — maybe now the spider tries to run away? — which lead to yet more situations with more options. By linking all these relatively simple options, step by step by step, you end up with a sophisticated map of possibilities, formally called a “causal network.”

Computer programmers already use this kind of story flowchart all the time: It’s the basic skeleton of many roleplaying games. Instead of analyzing every possible option, Parunak says, you simplify the problem to “When the player is in one particular state, what can you do next?”

“It has huge potential for military people who aren’t computer programmers, but they love to draw boxes and arrows,” he went on. “I met with many people in the IC [intelligence community] and whenever they wanted to explain a scenario, the first thing they would do is pull out a piece of paper or walk to a whiteboard and start drawing boxes and arrows.”

Of course, these flowcharts of options are more complicated than Miss Muffet’s and require more expertise to draw. One of Parallax’s military scenarios — which they can’t share with the public — was drafted by a retired Air Force master sergeant and includes almost a thousand discrete events.

The good news? Such military experts don’t need to learn to code in order to draw flowcharts. Even better? The AI doesn’t need to understand the story of the flowchart to analyze it, just understand that event A has options A1, A2, A3, etc. that lead to situations B1, B2, B3, and so on. Add positive and negative values to each situation, so the computer knows which ones to seek out and which to avoid, then set the algorithm loose to explore the possibilities over and over, learning optimal paths that might not be apparent to a human looking at the flowchart.
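A toy version of that process might look like the sketch below. Every state name, probability, and value is made up for illustration, and the learning rule (a simple tabular Q-learning update with purely random exploration) is an assumption of this sketch, not a description of Parallax's actual software.

```python
import random
from collections import defaultdict

# Hypothetical causal network: situation -> option -> [(probability, next situation)].
NETWORK = {
    "spider_arrives": {
        "run_away": [(1.0, "tuffet_abandoned")],
        "keep_eating": [(0.5, "spider_leaves"), (0.5, "spider_climbs_closer")],
        "smush_spider": [(0.8, "spider_gone"), (0.2, "spider_bites")],
    },
    "spider_climbs_closer": {
        "run_away": [(1.0, "tuffet_abandoned")],
        "smush_spider": [(0.8, "spider_gone"), (0.2, "spider_bites")],
    },
}

# Made-up values telling the computer which situations to seek or avoid.
VALUES = {
    "tuffet_abandoned": -1,
    "spider_leaves": 2,
    "spider_gone": 1,
    "spider_bites": -5,
}


def sample_next(outcomes, rng):
    probs = [p for p, _ in outcomes]
    states = [s for _, s in outcomes]
    return rng.choices(states, weights=probs)[0]


def explore(start, episodes=6000, seed=0):
    """Walk the network at random many times, averaging what each option yields."""
    rng = random.Random(seed)
    q = defaultdict(float)      # estimated value of (situation, option)
    counts = defaultdict(int)
    for _ in range(episodes):
        state = start
        while state in NETWORK:                 # stop at situations with no options
            option = rng.choice(sorted(NETWORK[state]))
            nxt = sample_next(NETWORK[state][option], rng)
            # Best estimated follow-up from the next situation (0 if it is final).
            future = max((q[(nxt, o)] for o in NETWORK.get(nxt, {})), default=0.0)
            target = VALUES.get(nxt, 0) + future
            counts[(state, option)] += 1
            q[(state, option)] += (target - q[(state, option)]) / counts[(state, option)]
            state = nxt
    return q


def best_option(q, state):
    return max(NETWORK[state], key=lambda o: q[(state, o)])


q = explore("spider_arrives")
print(best_option(q, "spider_arrives"), best_option(q, "spider_climbs_closer"))
```

With these invented numbers, the exploration should favor "keep_eating" at the start and "smush_spider" if the spider climbs closer. The algorithm only ever sees labels, links, and values; it never needs to know what a spider or a tuffet is.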

“It’s a very simple decision process,” Parunak said, “which lets it run extremely fast,” over and over until the AI has enough data to make correlations.

Tailorable Abstraction: Simulating The Simulations

One lesson of chess engines is that if you let an AI play a game over and over enough times, it can learn to play as well as, or better than, a human. And while chess is too simple to be a realistic wargame, BAE scientist Marco Pravia told Breaking Defense, there are lots of better simulations available, many of them developed specifically for the Defense Department using detailed (often classified) data on real-world weapons systems.

“There is already a huge library of simulations that people have spent decades, in many cases, refining, [and] that planners already find useful,” Pravia said in an interview. So instead of running simulated chess games to train a chess bot how to win, you’re running the military-specific simulators over and over until the AI can start to see what patterns of choices lead to success.

Of course, Pravia acknowledged, there’s a catch: These military simulations are a lot more complex than chess, so they require a lot more computing power to run. While you can teach an AI to play chess through brute force trial and error, running millions of simulated chess games until it learns the statistical correlations between certain moves and checkmate, you can’t do that with military-specific simulations.

So BAE is taking a shortcut: Essentially, they’re simulating the simulations. Running a military simulator creates data that an AI can analyze. Give the AI enough of that data, and it can create a simplified version of the original simulation that captures the essential dynamics but abstracts away minor details. That simplified simulation-of-a-simulation — what BAE calls a “tailorable abstraction” — takes much less computing power to run, so you can run it over and over and over again, creating enough data for machine learning algorithms to analyze that output in turn.
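The general pattern described above is sometimes called surrogate modeling, and a bare-bones sketch of it follows. The "detailed" simulator here is a hypothetical stand-in (a small attrition loop written for this example), and the interpolation-based abstraction is an assumed technique for illustration; BAE's actual method is not described in that detail.

```python
import bisect


def detailed_simulation(force_ratio, dt=1e-4, max_steps=200_000):
    """Hypothetical slow simulator: two sides wear each other down step by step."""
    blue, red = float(force_ratio), 1.0
    for _ in range(max_steps):
        if blue <= 0 or red <= 0:
            break
        blue, red = blue - red * dt, red - blue * dt
    return max(blue, 0.0)   # surviving blue strength


def build_abstraction(simulator, low, high, resolution):
    """Run the slow simulator at a few inputs, then interpolate between them.

    `resolution` (at least 2) is the knob a human expert would tune: more
    sample points keep more detail but cost more runs of the slow simulator.
    """
    xs = [low + i * (high - low) / (resolution - 1) for i in range(resolution)]
    ys = [simulator(x) for x in xs]

    def cheap_model(x):
        x = min(max(x, low), high)                        # clamp to sampled range
        i = min(bisect.bisect_right(xs, x) - 1, resolution - 2)
        t = (x - xs[i]) / (xs[i + 1] - xs[i])
        return ys[i] + t * (ys[i + 1] - ys[i])            # linear interpolation

    return cheap_model


# Eleven slow runs buy a model that can be queried as often as needed.
cheap = build_abstraction(detailed_simulation, low=1.5, high=4.0, resolution=11)
print(cheap(2.3), detailed_simulation(2.3))
```

The cheap model answers with a handful of arithmetic operations instead of thousands of simulation steps, at the price of small errors between sample points. Whether those errors matter is the kind of judgment a human expert would have to make.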

But, when you’re simplifying the original simulation into the “tailorable abstraction,” how do you know you’re only getting rid of trivial details, not essential ones? That’s a judgment call, Pravia acknowledged, one that must be made by expert humans.

In essence, it’s a layer cake of AI and human expertise: Humans create the underlying simulations, AI generates the simulations of the simulations, and humans tune those tailorable abstractions to meet specific purposes.

And how will DARPA evaluate if any of these three approaches works? By feeding the results back to human beings.

“We’re having [military] planning experts look at the outputs and say, ‘actually, that was kind of useful’” – or not, Kofford said: “Ultimately, it’s going to be up to planners to let us know if we’re wasting time or helping them.”