DENVER: The Pentagon wants a combat network that can suck up sensor data from across the battlefield in seconds and automatically match targets with the best weapons to strike them, like Uber pairing passengers to drivers. But generals and civilian officials alike warn that AI-driven command and control must leave room for human judgment, creativity, and ethics. The question is how to strike that balance.

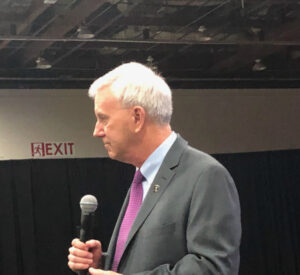

“You’re going to provide your subordinates the best data you can get them, and you’re going to need AI to get that quality of data,” said recently retired Gen. Robert Brown, “but then that’s balanced with, they’re there on the ground.” Military leaders must make their own judgment calls based on what they see and hear and intuit, not just click “okay” on whatever battle plan the computer suggests, as if they’re paging through the latest Terms & Conditions boilerplate on their smartphone.

“People are wrestling with this across the board [in] business, academia, certainly the military,” Brown told the Association of the US Army’s AI conference here. He’s wrestled with it personally, a lot: As the four-star commander of US Army Pacific, Brown says he oversaw “literally hundreds of exercises, wargames, experiments, simulations working on Multi-Domain Operations,” the military’s emerging concept for future conflict across the five domains of land, sea, air, space, and cyberspace.

Brown’s bottom line: “It’s an art and a science.”

“You’ve got to take the analytics and leverage them,” he explained, neither ignoring them nor trusting them blindly. “In some cases — if you’re certain, you feel very confident in the information, and you know enough to know that the data is that good — that might be the 75 percent of your decision and the art is 25. On the other hand, it may be just the opposite.”

To help commanders know when to trust the AI and when not to, “any information that the machine is telling us should come with a confidence factor,” suggested another senior officer at the conference, Brig. Gen. Richard Ross Coffman. He leads work on the future armored force, which will include both an Optionally Manned Fighting Vehicle and a family of Robotic Combat Vehicles.

“It should be reliable, and that reliability should build trust,” Coffman said of automation. How reliable? At least “as good as a human,” he said, “which is seemingly a very high standard — until you get a human behind a gunsight and have them scan, and what we find is, they don’t pick up 100 percent. It’s somewhere between 80 and 85 percent.”

In other words, neither the human nor the machine is infallible. The trick is making sure each can catch the other’s errors, instead of making each other worse – a form of artificial stupidity behind everything from friendly-fire shootdowns to Tesla crashes.
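Coffman's "confidence factor" can be sketched in a few lines. This is only an illustration, not any fielded Army system: the `Detection` type, the `route` function, and the 0.80 cutoff are all hypothetical. The cutoff borrows the low end of Coffman's human figure purely as a placeholder, and a gunner's detection rate is not the same measurement as a machine's per-report confidence.

```python
from dataclasses import dataclass

# Hypothetical cutoff, borrowed from the low end of the 80-85 percent
# human scan figure quoted above. An assumption for illustration only.
HUMAN_BASELINE = 0.80


@dataclass
class Detection:
    label: str         # what the machine thinks it sees
    confidence: float  # the machine's own estimate, from 0.0 to 1.0


def route(detection: Detection, baseline: float = HUMAN_BASELINE) -> str:
    """Decide how a machine report reaches the human who must act on it."""
    if not 0.0 <= detection.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if detection.confidence >= baseline:
        # Still shown with its score, so the human can weigh it.
        return "show_with_confidence"
    # Below the baseline, ask a human to check before anyone relies on it.
    return "needs_human_check"
```

Either way the score travels with the report, so the human can weigh the machine's claim rather than simply accept it.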

The more your AI has to make do with data that is unreliable, incomplete, or even deliberately falsified by a savvy adversary – and it will be all three of those things, to some extent, in the fog of a war with Russia or China – the less a commander can trust the AI’s analysis. It’s worth noting that the four-star chief of Air Combat Command said he still didn’t trust the Pentagon’s Project Maven AI, and Maven’s former director freely admitted that the early versions were right a little more than half the time.

But at the same time, the faster the fight moves, the greater the number of things happening at once – from missile launches to bot attacks — the harder it is for human minds to keep up, and the more they may have to rely on the AI. “You certainly can’t lose that speed and velocity of decision that you need,” Brown said.

‘Thousands of Kill Chains’

At the Denver conference, the Army’s argument about the limits of AI got agreement even from the most technophilic service, the Air Force.

“We can’t lose the creativity of the human mind,” said Brig. Gen. David Kumashiro, director for joint integrator for the Air Force Chief of Staff, who spoke alongside Brown at the conference.

So how do you actually input that human factor into an AI system?

“Go back to what General Brown mentioned about both the art and the science – the science being those analytic tool sets on top of the data, and also the outputs of the algorithms that you can compare and contrast and make sense of,” Kumashiro said. “The art piece is that human, at a minimum on the loop, to be able to look at that data and the results of [the] decision-making tool or algorithm, [and asking], ‘does this make sense?’”

The problem is that future conflicts may move too fast for human decisionmakers to keep up. Indeed, they already do in some important areas, from cybersecurity against massed attacks by botnets to defense against salvos of incoming missiles. Now scale up that problem for a theater of operations where an advanced nation-state like Russia or China – without US compunctions about human control – may have unleashed thousands of automated weapons at once.

“When we look at thousands of kill chains” – that is, the whole process from detecting a potential target to identifying it to destroying it, multiplied by thousands of targets – “what does that mean for us as humans in the loop to be able to comprehend and react to that number of threats to us?” Kumashiro asked. “What does it mean to articulate mission command in that environment?”

Mission command, the traditional US doctrine, says leaders should give their subordinates a crystal-clear vision for what they’re trying to achieve but also provide them significant leeway in how to achieve it. After all, intelligence reports are never entirely accurate, battlefield conditions constantly change, and communications with higher headquarters often fail, forcing junior leaders to improvise without waiting for new orders.

But how does that change when at least some decisions must be automated, and when all decisions could benefit from AI advice? In that case, Kumashiro suggested, at least part of “the understanding, the intent, and the trust of the joint force commander” could be embodied “algorithmically, in an AI program.” That could mean, in addition to giving instructions to their human subordinates, commanders could also adjust the parameters for everyone’s AI advisors.

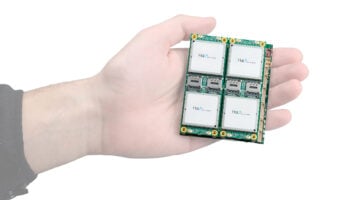

At the same time, Kumashiro continued, it’s vital to train subordinates to make their own decisions when access to the network is cut off. Even if future frontline leaders have a portable AI advisor on their vehicle or in their pocket, its value will be sharply limited when it loses access to up-to-date data from other units. The future force will need “operating concepts and TTPs that allow us to operate [even] when we are disconnected from the hive,” he said, “[so] the warfighter at the farthest reaches of the tactical edge can still perform what they need to do.”

“We have to practice it, we have to exercise it,” said Kumashiro, who’s helping run new Air Force command & control experiments every four months. “We can’t say, ‘hey, let me just do this annual wargame’ and that’s it. We have to continually iterate and learn.”

The Limits of Human Control

There’s a crucial unanswered question in all of this debate. Can human beings really control AI and automation? That’s not as simple as giving a human being an “off” switch, especially if the automated process is too fast and complex for them to understand.

This isn’t just a tactical consideration, but also a legal, moral and strategic one, tied to America’s increasingly contested status as an international leader on ethics. “When American soldiers go to war, we bring our values with us,” said James McPherson, the civilian currently performing the duties of the deputy secretary of the Army. “[Artificial intelligence] will revolutionize warfighting by maximizing efficiency and minimizing risks to our soldiers, but in accordance with the rule of law, US commanders will always retain full responsibility for the weapons they employ.”

“The challenge is developing AI and weapons systems that deploy AI, [that allow] that a human intervenes to ensure we retain that moral high ground,” McPherson said. “There will always be a human in the kill chain.”

Then again, maybe the human will be only “on the loop,” as Brig. Gen. Kumashiro put it. So it’s worth unpacking these concepts in plain English.

A human being is in the loop if the automation cannot act – say, jam an incoming missile or drop a bomb – without human approval. A human being is on the loop if the automation can take action on its own – as happens even today in areas like short-range missile defense, where there’s no time for a human to react – but the human monitors what’s happening and can override the automation or shut it off.
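The difference comes down to what the automation does when the human says nothing. A minimal sketch, using hypothetical names (`Control`, `may_act`) that come from no real weapons system:

```python
from enum import Enum
from typing import Optional


class Control(Enum):
    IN_THE_LOOP = "in"  # automation cannot act without human approval
    ON_THE_LOOP = "on"  # automation acts unless the human overrides it


def may_act(mode: Control, human_decision: Optional[bool]) -> bool:
    """Return True if the automation is allowed to act.

    human_decision: True = approved, False = vetoed or overridden,
    None = the human has not responded (for example, no time to react).
    """
    if mode is Control.IN_THE_LOOP:
        # Silence is not consent: only an explicit approval counts.
        return human_decision is True
    # On the loop: silence lets the automation proceed; only a veto stops it.
    return human_decision is not False
```

With no human input, `may_act(Control.IN_THE_LOOP, None)` is `False` while `may_act(Control.ON_THE_LOOP, None)` is `True`. That default is the whole distinction, and it matters most when there is no time for the human to answer.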

In practice, there’s a whole spectrum of grey areas between these black-and-white distinctions. For example, the Navy’s new Long-Range Anti-Ship Missile is launched by a human who gives it a specific target. But when LRASM reaches the target area, it is up to the missile’s own computer brain to sort through jamming, decoys, and other ships – potentially including civilian vessels – so it can find that target and strike it. At each phase of this particular kill chain, is the human in the loop, on it, or off?

The idea of weapons that make their own decisions is deeply unnerving to both military and civilian leaders in the Pentagon.

“I don’t want an autonomous vehicle,” said Brig. Gen. Coffman. “I want that vehicle to do exactly what I say is okay or not okay.”

“We are not looking to build a Terminator,” Coffman emphasized to the conference. “We are looking to build something that can operate without a human on board and accomplish our mission.”

Coffman’s goal may seem straightforward. But in practice, it’s impossible to predict how any automated system will perform in all possible situations. That’s true even with traditional, deterministic IF-THEN code. It’s even more true with machine learning, which adjusts its own internal parameters as it learns, often in ways that baffle its developers, let alone users.

Today, the armed forces require exhaustive testing before they field new equipment, noted Ryan Close, director of air systems in the Army’s famed night vision lab. “When someone signs off on ‘this is deemed safe’ … there is a lot of hesitancy to accept risk without being able to understand every contingency,” he said.

But, “as we move into AI-type algorithms… there’s always unknown unknowns,” Close warned. “There’s always things that can happen that it’s almost impossible to test against — definitely impossible within a constrained budget and schedule.

“The Army has to make some difficult decisions, if they’re going to back off some of the current testing and safety guidelines and metrics to allow some of these developments to get out in the field faster,” he said. “We have to keep up with adversaries that don’t follow our ethics and constraints.”