A “Project Origin” Robotic Combat Vehicle surrogate conducts a live-fire exercise in 2021. (Army photo)

WASHINGTON — Cost overruns and schedule slips abound in the defense business, but at least the Pentagon has plenty of experience building physical weapons. By contrast, training AI algorithms takes very different skills, which the Defense Department and its traditional contractors largely lack.

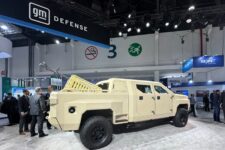

That’s why it’s worth noting that, this morning, a small Silicon Valley company called Applied Intuition announced it had won a contract — brokered by the Pentagon’s embassy in the Valley, the Defense Innovation Unit (DIU), using its streamlined Commercial Solutions Opening process — to provide software tools for the Army’s Robotic Combat Vehicle (RCV) program. (This contract complements the Army’s existing deals with Qinetiq and Textron to build prototypes.) While pocket change by Pentagon procurement standards, with a maximum spend of $49 million over two years, Applied’s contract could help bring private-sector innovation in self-driving vehicles to the armed forces.

“Commercial industry has a leg up on this, because they’ve invested a tremendous amount into these efforts,” said David Michelson, a former Army infantryman who now manages autonomous systems for DIU. “The industry also understands how to deploy this stuff and how to get these systems and software actually out in the real world.”

The Defense Department can’t just hire a contractor to deliver a box of software and then walk away, Michelson emphasized in an interview with Breaking Defense. Military robotics need the same kind of comprehensive development and sustainment pipeline that Tesla, for example, uses to suck in petabytes of data from the sensors on its vehicles, analyze it, and employ it to refine its algorithms — and do it over and over and over, day after day after day, throughout the service life of a program.

“The DoD just hasn’t had that infrastructure in place,” Michelson said. “They don’t have good systems for harvesting that data, managing it, storing it, manipulating it, and sharing it.

“That’s new for a lot of acquisitions offices out there; they haven’t really had to think about the software development pipeline,” he said. “When you’re fielding a tank, you’re thinking about the logistics tails and the maintenance for it, actually having parts on hand, and the training that goes into that… There’s no thought of software development pipelines, updating algorithms.”

But the Defense Department has to start somewhere — and RCV, Michelson said, is “the pathfinder for the Department.”

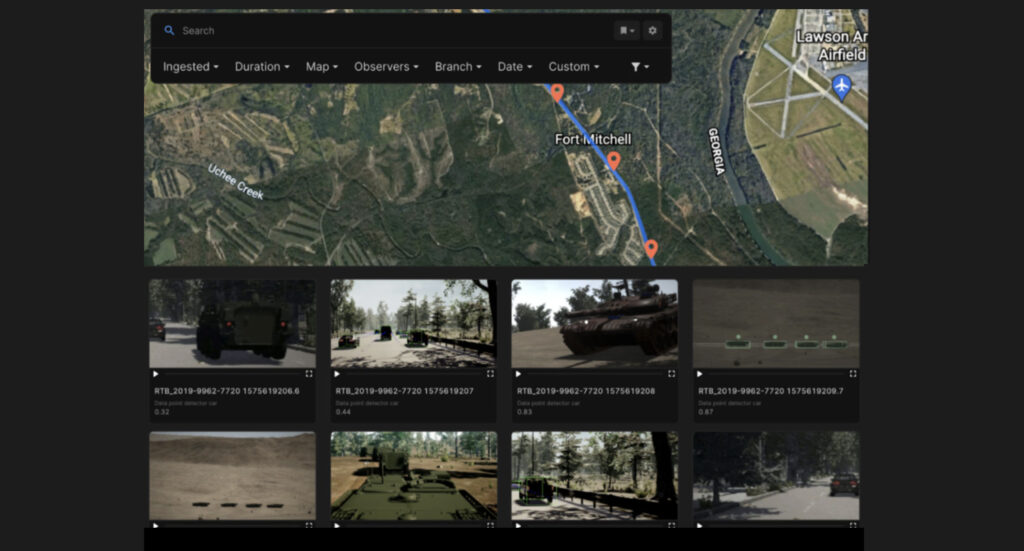

Applied Intuition’s “Strada” software lets developers analyze the logs of an unmanned vehicle’s movements. (Applied Intuition screenshot)

‘You Need A Tremendous Amount Of Data On The Ground’

Michelson’s team at DIU had hitherto focused on getting off-the-shelf civilian mini-drones approved and available for military use, such as the Skydio RQ-28A quadcopter entering Army service this fall. Now Michelson is taking on the Army’s Robotic Combat Vehicle. But RCV is a much more complex challenge, because it has to move on land.

“There’s not a lot you’re going to hit in the sky,” Michelson said. “In the air, you can deal with several seconds of latency [between spotting an obstacle and reacting]. On the ground, if you have several seconds of latency, good luck. You’re going to run into a lot of different issues” — sometimes, literally run into them.
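Michelson’s point can be put in rough numbers: before a vehicle even begins to respond to an obstacle, it covers a distance equal to its speed multiplied by its reaction latency. The short sketch below assumes a hypothetical ground speed of 36 km/h (10 m/s) and a few illustrative latencies; none of these figures come from the Army, DIU or Applied Intuition, and the function names are made up for this example.

```python
def blind_distance_m(speed_kmh: float, latency_s: float) -> float:
    """Distance in metres covered before the vehicle starts reacting."""
    speed_mps = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_mps * latency_s


def reacts_in_time(speed_kmh: float, latency_s: float, obstacle_m: float) -> bool:
    """True if the vehicle begins reacting before it reaches the obstacle.

    Braking distance is ignored, so passing this check is necessary but
    not sufficient for actually stopping in time.
    """
    return blind_distance_m(speed_kmh, latency_s) < obstacle_m


if __name__ == "__main__":
    # Hypothetical speed and latencies, for illustration only.
    for latency in (0.1, 1.0, 3.0):
        d = blind_distance_m(36, latency)
        print(f"36 km/h, {latency:.1f} s latency: {d:.1f} m travelled before reacting")
```

At 10 m/s, three seconds of latency means 30 m of travel before any reaction begins, and braking distance comes on top of that. In open sky that gap is usually empty; on the ground it can easily contain a tree, a ditch or another vehicle.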

Even the latest driver-assistance algorithms still struggle to recognize moving objects — pedestrians, bicyclists, other vehicles — in time to hit the brakes. And AI gets even harder when you go off-road, said Colin Carroll, an intelligence officer in the Marine Corps Reserve who headed Project Maven and helped run the Joint Artificial Intelligence Center (JAIC) before joining Applied Intuition’s government relations office.

“On-road you have lanes, you have markers, there’s a set rule of the road that everybody, in theory, is following around you,” Carroll said in an interview. “If you hit something, that is a fail, doesn’t matter what it is: a [traffic] cone, a person, another car, a tree.

“Off-road, though, you might want to hit something,” he continued. “If you’re in a tank, you can hit a tree and just run it over.”

So vehicles that drive themselves off-road not only need to recognize potential obstacles, as on-road AIs do; they must also decide which obstacles they must drive around and which they can safely drive through. That depends on the construction of the vehicle, the nature of the obstacle, and the physics of how the two interact. Can the robot brush aside that low-hanging branch, or will it break something? Can it drive through that mud, or will it get stuck? Is that water ahead an inch-deep puddle, or a pond?

The private sector is investing heavily in this problem, too. “There is quite a lot of money going into offroad mobility,” said Carroll, “[from companies like] Komatsu, Caterpillar, John Deere.”

Simulated LIDAR returns in Applied Intuition’s “Spectral” software (Applied Intuition graphic)

But military vehicles face an additional layer of complications that commercial off-road autonomy doesn’t. Most obviously: Someone is out there trying to kill you. That means military maneuver, as opposed to mere movement, has to take into account available cover and open ground. A “safe” path, in a military sense, requires not only navigating around obstacles, but calculating lines of sight from potential enemy positions so you can avoid driving through their kill zones. A robot on a reconnaissance mission, or an armed one, also needs to position itself to maximize its own field of fire, which requires guessing at where the enemy will be.
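Neither Michelson nor Carroll described how the Army’s software computes such paths. Purely as an illustration, the toy sketch below assumes a gridded elevation map, known enemy observer positions, a straight-line line-of-sight test sampled along the sight line, and a plain Dijkstra shortest-path search that adds a penalty for every cell an enemy can see. The function names, the 2 m eye height, the 1 m target height and the penalty value are all hypothetical choices, not anything from Applied Intuition’s tools or the RCV program.

```python
import heapq
from typing import Dict, List, Tuple

Grid = List[List[float]]  # hypothetical terrain elevation in metres, [row][col]
Cell = Tuple[int, int]


def has_line_of_sight(elev: Grid, observer: Cell, target: Cell,
                      eye_height: float = 2.0, target_height: float = 1.0,
                      samples_per_cell: int = 4) -> bool:
    """Sample terrain along the observer-to-target line; blocked if any
    sampled cell rises above the straight sight line."""
    (r0, c0), (r1, c1) = observer, target
    z0 = elev[r0][c0] + eye_height
    z1 = elev[r1][c1] + target_height
    steps = max(abs(r1 - r0), abs(c1 - c0)) * samples_per_cell
    for i in range(1, steps):
        t = i / steps
        r = round(r0 + t * (r1 - r0))
        c = round(c0 + t * (c1 - c0))
        if elev[r][c] > z0 + t * (z1 - z0):  # terrain above the sight line
            return False
    return True


def exposure_map(elev: Grid, observers: List[Cell]) -> List[List[bool]]:
    """Mark every cell that at least one assumed enemy position can see."""
    rows, cols = len(elev), len(elev[0])
    return [[any(has_line_of_sight(elev, o, (r, c)) for o in observers)
             for c in range(cols)] for r in range(rows)]


def safest_path(elev: Grid, exposed: List[List[bool]], start: Cell,
                goal: Cell, exposure_penalty: float = 10.0) -> List[Cell]:
    """Dijkstra over 4-connected cells; each move costs 1, plus a penalty
    if the destination cell is exposed. Returns [] if goal is unreachable."""
    rows, cols = len(elev), len(elev[0])
    dist: Dict[Cell, float] = {start: 0.0}
    prev: Dict[Cell, Cell] = {}
    heap = [(0.0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break
        if d > dist.get(cell, float("inf")):
            continue  # stale heap entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                nd = d + 1.0 + (exposure_penalty if exposed[nr][nc] else 0.0)
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in dist:
        return []
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


if __name__ == "__main__":
    # Hypothetical terrain: a 6 m ridge (row 2) between an enemy and the route.
    terrain = [
        [0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0],
        [6, 6, 6, 6, 0],
        [0, 0, 0, 0, 0],
    ]
    exposed = exposure_map(terrain, observers=[(0, 2)])
    print(safest_path(terrain, exposed, start=(3, 0), goal=(3, 4)))
```

The penalty makes the trade-off explicit: a larger `exposure_penalty` makes the planner accept longer detours to stay out of sight. A real system would also have to handle uncertain enemy positions, vegetation, sensor range and the vehicle’s own mobility limits, none of which this sketch models.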

More subtly, a military vehicle may not set out with a specific destination defined in precise coordinates. Instead, it may have a broader mission — “search and destroy,” for instance, or “patrol this area” — that requires defining new destinations on the fly to avoid threats or hunt potential targets.

“I’m not aware of … a single one of our [commercial] customers that’s [saying] ‘we’re going to go for a drive today and we don’t know where we’re going to wind up, and the machine’s going to figure that out,’” Carroll said. “No one’s investing commercial dollars in figuring that out.”

Penetrating this kind of fog of war requires far more sophisticated algorithms, crunching even more data, than civilian off-road navigation does.

All told, Michelson said, “you need a tremendous amount of data on the ground.”

Applied Intuition’s “Spectral” software generates virtual environments to test AI perception algorithms. (Applied Intuition screenshot)

Let’s Get Digital, Digital

The good news is that you do not need to build an inch-by-inch digital map of everywhere you want your robotic ground vehicles to go. (Even if you could, it would be immediately outdated in a war zone, where explosions can change the landscape in an instant.) Instead, Michelson and Carroll explained, you can use your data to teach your AI to navigate in enough specific situations that — like a human infant — it learns to generalize and can start handling unfamiliar environments on its own.

What’s more, they argued, AI can do a lot of that learning in a simulation. By creating a detailed virtual environment and feeding the algorithms the kind of data their sensors would see in the physical world, you can run through the hundreds of repetitions required at a fraction of the time and cost involved in real-world driving — and with zero risk of accidents. Simulation is especially useful for testing collisions and other hazards that would destroy a physical prototype.

“There’s no substitute for real data,” Michelson cautioned, “[but] once you get digital, you can do it over and over and over.”

Doing it over and over and over is essential, because you can’t stop with software version 1.0. Autonomy developers start off testing their vehicles in small, self-contained environments before gradually exposing the algorithms to greater variety and complexity: bad weather, nighttime lighting, different types of terrain, different regions of the world.

Testing sensors in different simulated weather conditions using the “Spectral” software (Applied Intuition graphic)

“It’s not like Lord of the Rings, where there’s one algorithm to rule them all,” Michelson said. “You can simultaneously train multiple ‘stacks’ — and a stack is the full pipeline of autonomous vehicle software.”

This AI training doesn’t stop with the delivery of the initial software, any more than the supply of spare parts stops when the first physical vehicle is delivered. Updates must continue through the software’s service life as it takes on new missions in new regions in a changing world. The military might even need to systematically conduct mission-specific data-gathering and software updates before deploying robots abroad, just as units today conduct “intelligence preparation of the battlefield” and pre-deployment training. And it would need to update its AI with new data after every battle, just as human troops conduct After-Action Reviews.

It’s a huge endeavor. The mechanized armies of the industrial age lived — or died — on a constant flow of fuel, spare parts, and ammunition. The unmanned armies of the AI era will require a constant flow of data, from the frontline back to the developers, and software updates from the developers to the frontline.

“If you have a bunch of robots out in the field in an operational environment but you have no way again to get that data back, you won’t learn why it’s failing, if it’s failing,” Michelson said. “If you think that you’re going to carry around a bunch of USB sticks for updates and put them in every single robot, that’s just inefficient.”
