Pentagon grapples with growth of artificial intelligence. (Graphic by Breaking Defense, original brain graphic via Getty)

WASHINGTON: The Defense Department is updating its guidance on autonomous weapons to consider advances in artificial intelligence, with a revised directive slated for release later this year, the head of the Pentagon’s emerging capabilities policy office told Breaking Defense in an exclusive interview.

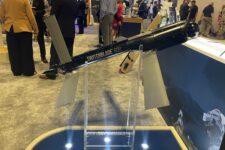

DoD Directive 3000.09, signed by then-Deputy Secretary of Defense Ash Carter on Nov. 21, 2012, established policy, responsibilities and review processes for the “design, development, acquisition, testing, fielding, and employment of autonomous and semi-autonomous weapon systems, including guided munitions that can independently select and discriminate targets.”

But in the decade since its release, artificial intelligence and machine learning technologies have made a massive leap forward, and it’s “entirely plausible” there may need to be revisions that reflect the Pentagon’s “responsible AI” initiative and other ethical principles adopted by the department, said Michael Horowitz, DoD director of emerging capabilities policy.

“Autonomy and AI are not the exact same thing,” Horowitz told Breaking Defense on May 24. “But given the growing importance that AI plays, and thinking about the future of war and the way the department has been thinking about AI, I think ensuring that’s reflected in the directive seems to make sense.”

It’s important to note that, under the definitions in DoD Directive 3000.09, the military currently does not operate any weapon system that qualifies as an autonomous weapon and, at least publicly, is not developing one. The directive defines an “autonomous weapon system” as one that, once activated, can select and engage targets, applying lethal or nonlethal force, without further intervention by a human operator.

The directive does not apply to unarmed drones, or to armed drones like the MQ-9 Reaper, whose flight path and weapons release are controlled by a human pilot at a remote location. It also doesn’t apply to systems like the Switchblade loitering munitions the US has provided to Ukraine, which are programmed by a human operator to hit specific targets and can be called off when needed.

“It was the first national policy published on autonomous weapons systems, and actually remains one of the only publicly available national policies,” Horowitz said. “It set the standard essentially for the global dialogue that followed and demonstrated America’s responsible approach to the potential incorporation of autonomy into weapon systems.”

In a 2012 interview with Defense News, David Ochmanek, then deputy assistant secretary of defense for force development, described the directive as “flexible” and stressed that a “rigorous review process” would be in place before any future autonomous weapon could be approved.

But that promise has done little to assuage opponents, who draw comparisons to Terminators and have organized efforts, such as the aptly named Campaign to Stop Killer Robots, to preemptively ban the technology. Horowitz, a longtime drone expert who once authored a paper titled “The Ethics & Morality of Robotic Warfare: Assessing the Debate over Autonomous Weapons,” is well aware of the debate around such systems. While he avoided commenting on those concerns directly, he noted that the department’s growing focus on autonomy and AI in recent years has always assumed a human being would be involved in the process.

“I would say the one of the things about the approach of the United States to the role of AI and autonomous systems has been imagining these systems as a way to enhance the warfighter,” he said. “It’s why, dating back a couple of administrations, the United States has talked about things like human-machine teaming, because it tends to think about AI and autonomous systems as things that work synergistically with the best trained military in the world to improve its capacity.”

Modernized AI

The update is occurring not because a major technological breakthrough is on the horizon, but because a department standard requires that directives be updated every 10 years. It’s unclear exactly how much of the original directive will need to be revised, but Horowitz played down the prospect of a major rewrite.

“Our instinct entering this process is that the fundamental approach in the directive remains sound, that the directive laid out a very responsible approach to the incorporation of autonomy and weapons systems,” Horowitz said.

“But we want to make sure, of course, that the directive still reflects the views of the department and the way the department should be thinking about [autonomous] weapon systems,” he continued. “You know, it has been a decade. And it’s entirely plausible that there are some updates and clarifications that would be helpful.”

Horowitz declined to detail where he thinks changes may be needed, but he did highlight that the document reflects the Pentagon of 2012, which has changed over the course of the Obama, Trump and Biden administrations. For instance, the review process laid out in the original directive references the Under Secretary of Defense for Acquisition, Technology, and Logistics, a position that no longer exists; its responsibilities are now split between the Under Secretary of Defense for Acquisition and Sustainment and the Under Secretary of Defense for Research and Engineering.

He also stressed that the revised directive will focus specifically on autonomous weapons, not on the broader AI efforts under way throughout the department.

“When this directive was published in 2012, the notion of the way that algorithms, how algorithms might impact the military seemed pretty futuristic, or seemed further away. And autonomous weapon systems were a specific thing that the department chose to write a directive about,” he continued. “I think it’s important that the department consider the way that should then also influence this directive … given the intersection between AI and autonomous systems, and I say autonomous systems as opposed to autonomous weapon systems deliberately.

“There’s so many AI applications that can or are already influencing the American military and will influence the American military that, you know, that have nothing to do with this.”

Horowitz’s office, itself only recently established, will seek input from defense organizations stood up in the past decade, such as the office of the Chief Digital and Artificial Intelligence Officer (CDAO). Other organizations that may be relevant to the revamp of DoD Directive 3000.09, namely the Joint Artificial Intelligence Center, Defense Digital Service and Office of Advancing Analytics, are slated to become part of the CDAO office on June 1, Breaking Defense reported earlier this week.

He also expects to get input from the services, the Joint Staff and other stakeholders, of which, he noted, there are significantly more now than a decade ago.

Jaspreet Gill in Washington contributed to this report.