Army MRAP (Mine-Resistant Ambush-Protected) armored truck with roof-mounted CROWS (Common Remotely Operated Weapon Station)

WASHINGTON: The Army is actually field-testing artificial intelligence’s ability to find targets and aim weapons for human troops. After decades of debate and R&D, the technology is being used in one of the harshest environments imaginable: the Yuma Desert.

In Army Futures Command’s Project Convergence experiments at Yuma Proving Ground, an artificial intelligence known as Firestorm is warning ground troops of threats, sending them precision targeting data, and in some cases even aiming their vehicles’ weapons at the enemy, the Army’s director of ground vehicle modernization says in an exclusive interview.

Brig. Gen. Richard Ross Coffman during a Robotic Combat Vehicle demonstration.

But when I suggested it might be technically possible to have the AI fire the weapons by remote control as well, Brig. Gen. Richard Ross Coffman told me emphatically: “That is nowhere on the scope. We are committed to having a human in the loop.”

Firestorm takes in data from satellites, drones, and ground-based sensors, Coffman explained. The AI continually processes that data and sends its conclusions over the Army’s tactical wireless network – “Firestorm is the brain,” he said, “the network is the spine” – to command posts, drones, mortar teams, and combat vehicles from Humvees to M109 armored howitzers.

That data flow serves multiple functions, each with a different balance of automation and human intervention.

First, the data automatically populates all the digital maps in command posts, vehicles, and soldiers’ handheld devices with the current locations of friendly and hostile forces. Troops normally have to check multiple systems to piece together a picture of the battlefield, and each unit often sees only part of it. The new system provides a single Common Operational Picture (COP) to help troops coordinate maneuvers, avoid friendly fire, and locate enemies long before they can physically see them, allowing them to avoid the foe or set up an ambush. (This vision was first explored in the late 1990s during the Army’s Advanced Warfighting Experiments, but those experiments lacked AI, software-defined radios, and much else.)

A Samsung Galaxy loaded with the military’s ATAK command-and-control software

The goal is for the AI to empower troops, not micromanage them, Coffman said. “It will allow the soldiers on the battlefield to see beyond their line of sight, with manned and unmanned ground and aerial sensors,” he told me. “It allows our forces to engage our enemies at the point and place in time of their choosing.”

(This kind of God’s-eye view of the battle was the vision for the Army’s Future Combat Systems program, whose awkward slogan was “see first, understand first, act first and finish decisively.” But FCS could never get the network to work and was canceled in 2009. Eleven years of technological progress, driven not by the military but by Silicon Valley, have made a dramatic difference.)

Second, Firestorm’s algorithms can prioritize among potential targets, calculate which units are best equipped and positioned to engage them, and pass targeting information directly to their weapons’ fire control systems. Those include the artillery’s Advanced Field Artillery Tactical Data System (AFATDS) and the computerized Remote Weapons Stations (RWS) installed on many Humvees and MRAPs since 9/11. At Yuma, those readily available and relatively inexpensive 4×4 tactical trucks and their RWS are standing in for the future Robotic Combat Vehicles and Optionally Manned Fighting Vehicles that Coffman’s team is still developing.

The Common Remotely Operated Weapon Station (CROWS) allows soldiers to see through sensors and fire the roof-mounted gun while staying inside their armored vehicle.

A Remote Weapons Station gives a gunner inside the vehicle screens and a joystick, allowing him to see through external sensors, aim the roof-mounted weapon, and fire without ever having to expose himself in an open hatch. Since weapons installed this way are already aimed by electronic controls and actuators, rather than by human muscles or hydraulics, Firestorm’s digital signals can actually bring the gun to bear on the target, a capability called “slew to cue.”

But there it stops, Coffman emphasized. “The human has to pull the trigger,” he told me. “He makes the call, based on [what he sees in] his sights.”

The current setup also requires human approval of targets being passed to artillery and other “indirect fire” weapons, whose gunners never lay eyes on the target, Coffman said: “As the enemy forces are detected, a human must confirm that those are enemy or a threat before a[n indirect] fire mission or direct fire engagement is conducted.”

Firestorm isn’t the only artificial intelligence getting a workout in Project Convergence. In fact, it isn’t even the only AI working to connect “sensors and shooters.” Instead of a single centralized Skynet trying to mastermind (or micromanage) operations, with all the rigidity and vulnerability that implies, the Army is looking at a federation of more specialized, less ambitious AIs, each assisting humans in different ways.

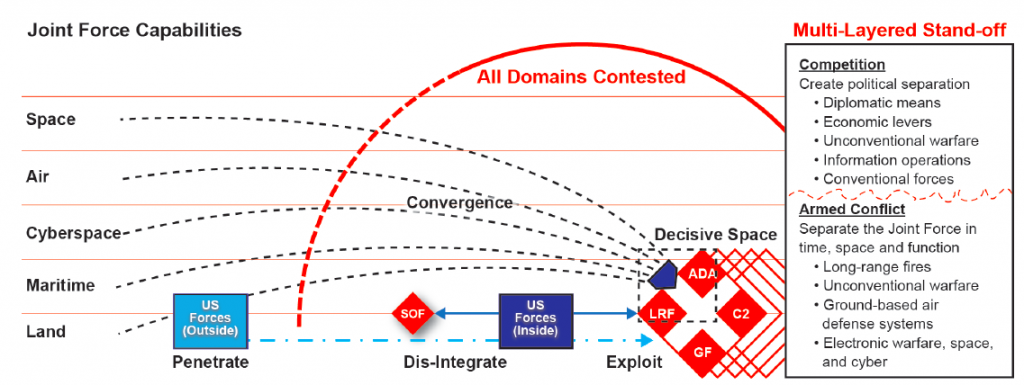

SOURCE: Army Multi-Domain Operations Concept, December 2018.

Firestorm is the tactical AI “brain” at Yuma itself, Coffman explained: It links sensors to shooters at the level of an Army division and below.

But there’s also an AI hub at Joint Base Lewis-McChord in Washington State, over 1,300 miles north of Yuma, which is testing the Army’s ability to coordinate strategic and operational-level fire support across a whole theater of war. That’s a crucial capability for the service’s planned arsenal of long-range missiles.

While the two AI systems are distinct, they are not truly separate, Coffman said. Both draw on some of the same data sources. Both contribute to that Common Operational Picture that troops from HQ to foxhole can see on their digital maps. And both systems have proven their ability to accelerate the kill chain from detecting a target to destroying it, he said: “We’re collecting the data out here at Yuma to get an exact number, but we’re talking about going from tens of minutes to seconds.”