Mr. Young Bang, Principal Deputy Assistant Secretary of the Army (Acquisition, Logistics and Technology), addresses the moderator and members of the panel during the Under Secretary of the Army’s Digital Transformation Panel at the Walter E. Washington Convention Center in Washington, D.C., Sept. 10, 2023. The event was in support of the AUSA 2023 Annual Meeting and Exposition. (U.S. Army photo by Henry Villarama)

WASHINGTON — The Army wants industry’s advice on how to build a “layered defense framework” for artificial intelligence that will let it adopt commercial AI safely, securely, and swiftly.

Called #DefendAI, a name officials said was suggested by ChatGPT, the scheme calls for extensive testing and tailored defenses for AI intended for highly sensitive systems, like weapons, tactical networks and aircraft flight controls. More mundane back-office applications, where a hidden backdoor or poisoned data would not have such severe consequences, would go through more relaxed procedures.

The details, however, are very much to be determined, and Army officials rolling out the plan on Wednesday emphasized they are eager for feedback and willing to make changes. The whole point of #DefendAI is to make it easier to tap the expertise and innovation of the private sector, said Young Bang, the principal civilian deputy to Army acquisition chief Doug Bush and the leader of many of the service’s software reforms.

“We really do appreciate industry support here. We do need your help,” Bang emphasized to a contractor-heavy audience at the Defense Scoop DefenseTalks conference Wednesday morning. “As I’ve been saying since I got here, we’re not going to do this better than you, we’re going to adopt what you have. This framework is going to be an attempt to really help us accelerate the adoption of third-party-generated algorithms.”

Bang wants industry input in two areas. First, he welcomed sales pitches for specific “processes and tools” the Army could buy to help implement the layered defense framework. “Y’all are in the business of making money,” he said. “We get that.”

In fact, Bang said, the service has already launched a prize competition for small businesses that will feed into the #DefendAI effort, the 2024 Scalable AI contest run by an Army outreach office, xTech. (Submissions for this round are already closed, with judging to take place at the annual AUSA mega-conference in October). Two of the three Scalable AI topics for this year focus on “automated AI risk management” and “robust testing and evaluation of AI.”

But beyond such specific tools, Bang went on, the Army also wants industry’s advice on the overall framework itself. “We’re going to be transparent,” he said. “You’re going to have opportunities to give us feedback about this framework so we’ll be able to adjust it.”

The service will issue formal Requests for Information this summer, said Bang and his chief aide for software, Jennifer Swanson. “The first one should probably go out in, hmmm, July?” Swanson said tentatively.

The #DefendAI framework those RFIs describe will be very much a work in progress and open to change, Swanson emphasized. “We’re not going to send you a finished product,” she said. “We’re going to send them [RFIs] as it evolves, so that you can help us to build it, just like we did with the Unified Data Reference Architecture.”

UDRA is how the Army aims to handle the essential groundwork for robust AI: well-organized and accessible data. Version 1.0 came out in March after almost 18 months of revisions, many based on private-sector suggestions.

“The Unified Data Reference Architecture doesn’t look anything like it did at the beginning, largely because of feedback we got from industry,” Swanson said — proof that “we do take your feedback.”

That’s not the only such example: When industry objected to submitting exhaustive and potentially burdensome “Artificial Intelligence Bills of Materials” for its algorithms, Bang backed off the “AI-BOM” idea and refocused on building up the Army’s own testing capabilities instead. That pivot is an important bit of context for the new #DefendAI effort.

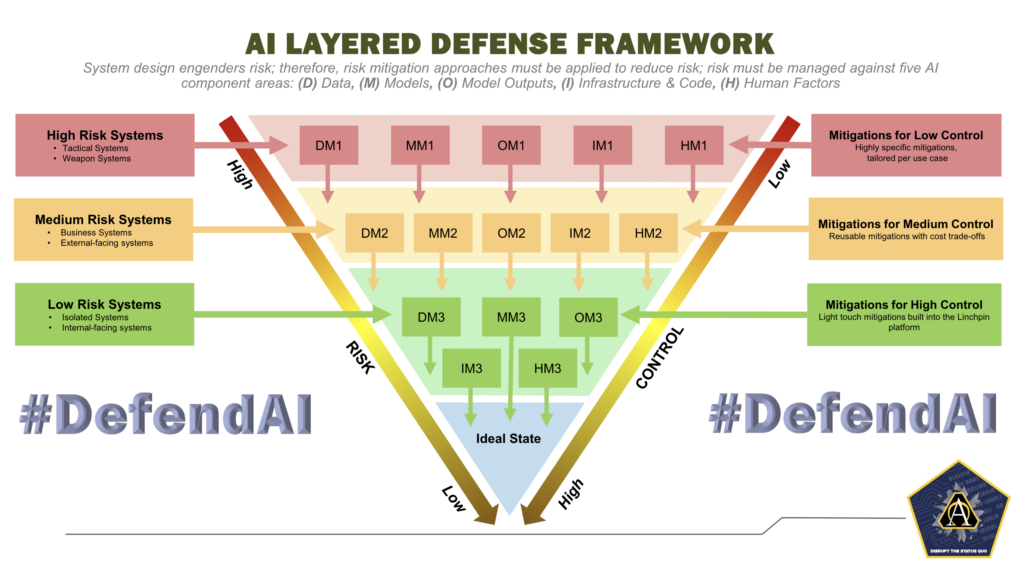

The Army’s “#DefendAI” scheme for safely and swiftly adopting commercial artificial intelligence systems. (Army graphic)

What Bang and Swanson presented on Wednesday, she said, was a “top level” overview of the framework, depicted graphically as an upside-down pyramid in which AIs are funneled from “high risk” at the top to “low risk” at the bottom. The more sensitive the system, the more layers of controls it must pass through before being deemed sufficiently secure.

“Tactical [and] weapons systems” are listed as examples of the highest-risk AIs, where an error or security vulnerability could lead to loss of life, so they will require “highly specific [security] mitigations, tailored [to specific] use case.” “Business systems” (accounting, personnel management, and so on) and “external-facing systems” (such as DoD websites) are in the “medium risk” category, so they can probably get by with less customized security measures, such as “reusable mitigations” applied across multiple similar AIs. Finally, “low risk systems” include those that are “isolated” from wider networks or “inward-facing” to DoD-only audiences: These may only require “light touch mitigations” of the kind already built into the Army’s Project Linchpin development platform.
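To make the tiering concrete, here is a minimal sketch of how the pyramid’s logic might be expressed as a lookup. It is not the Army’s implementation, which officials said is still being designed. The category labels come from the Army graphic as described above; the names `RiskTier`, `CATEGORY_TIER`, `MITIGATION_LAYERS` and `required_layers` are hypothetical, and the assumption that higher tiers stack additional layers on top of the lighter ones is one reading of “the more layers of controls it must pass through,” not a confirmed detail.

```python
from enum import Enum


class RiskTier(Enum):
    # Hypothetical tiers; the value is the number of mitigation layers required.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical mapping of the system categories named in the Army graphic to tiers.
CATEGORY_TIER = {
    "tactical": RiskTier.HIGH,
    "weapons": RiskTier.HIGH,
    "business": RiskTier.MEDIUM,
    "external-facing": RiskTier.MEDIUM,
    "isolated": RiskTier.LOW,
    "inward-facing": RiskTier.LOW,
}

# Ordered from lightest to heaviest. Assumption: layers accumulate as risk rises.
MITIGATION_LAYERS = [
    "light-touch mitigations",
    "reusable mitigations",
    "use-case-specific mitigations",
]


def required_layers(category: str) -> list:
    """Return the (hypothetical) mitigation layers a system in this category passes through.

    Raises KeyError for a category the sketch does not know about.
    """
    tier = CATEGORY_TIER[category]
    return MITIGATION_LAYERS[: tier.value]


if __name__ == "__main__":
    for category in ("weapons", "business", "isolated"):
        print(category, "->", required_layers(category))
```

Under these assumptions, a weapons-system AI passes through all three layers, a business system through the first two, and an isolated system only through the light-touch layer. The stated intent to automate the process is what makes a table-driven design like this plausible, but the real criteria and layers are still open for industry feedback.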

The goal is not a laborious, time-consuming process, Bang emphasized, but one that moves swiftly and, wherever possible, uses automation to replace slower-moving traditional processes that rely on overwhelmed humans and written checklists. “The intent is to automate all this,” he said. “The intent is to make this fast so we can actually adopt your algorithms faster.”

Risk can’t be eliminated, Bang acknowledged, but it can be mitigated, managed and reduced. “We recognize that there is going to be risk associated with everything,” he said. “We’re just trying to reduce the risk.”
